How Azure AI Evolved in 2025 and What to Look Forward to in 2026

As 2025 comes to a close, it's worth pausing to reflect on what's been a transformative year for Azure AI. The shifts weren't subtle - they were seismic. The platform evolved from being a place to host models into something fundamentally different: a comprehensive ecosystem where AI agents collaborate, reason, and take action. For anyone walking through enterprise deployments over the past twelve months, the difference between January and December felt like a decade of progress compressed into one year.

Remember the days when deploying a chatbot meant weeks of infrastructure setup, model training, and crossing fingers that it wouldn't hallucinate customer data? Those days feel like ancient history now. The landscape of Azure AI transformed so dramatically in 2025 that what once took months now happens in days - sometimes hours.

This wasn't just about faster deployments or shinier models. The industry moved from "AI that answers questions" to "AI that actually does things." Microsoft called it the agentic era, and Azure became the playground where these autonomous systems came to life.

Let's walk through what changed this year, what it means for building real solutions, and why architects should care.

From Models to Agents: The Big Shift

Think about the difference between a calculator and a personal assistant. A calculator responds only when you give it inputs - it computes and stops there. A personal assistant, on the other hand, understands context, remembers past conversations, pulls information from multiple sources, and takes action on your behalf.

That’s the leap Azure AI made in 2025.

It moved from being mostly a model hosting service to something much bigger: a platform for building and running AI agents. Instead of just responding to prompts, these systems can reason, coordinate steps, and interact with other services to get real work done.

Microsoft Foundry: The New Command Center

The biggest Azure AI announcement of the year came at Microsoft Ignite 2025, where Azure AI Foundry (itself the successor to Azure AI Studio) became Microsoft Foundry. This wasn’t just a rename. It reflected a complete rethink of how organizations build, deploy, and run AI systems.

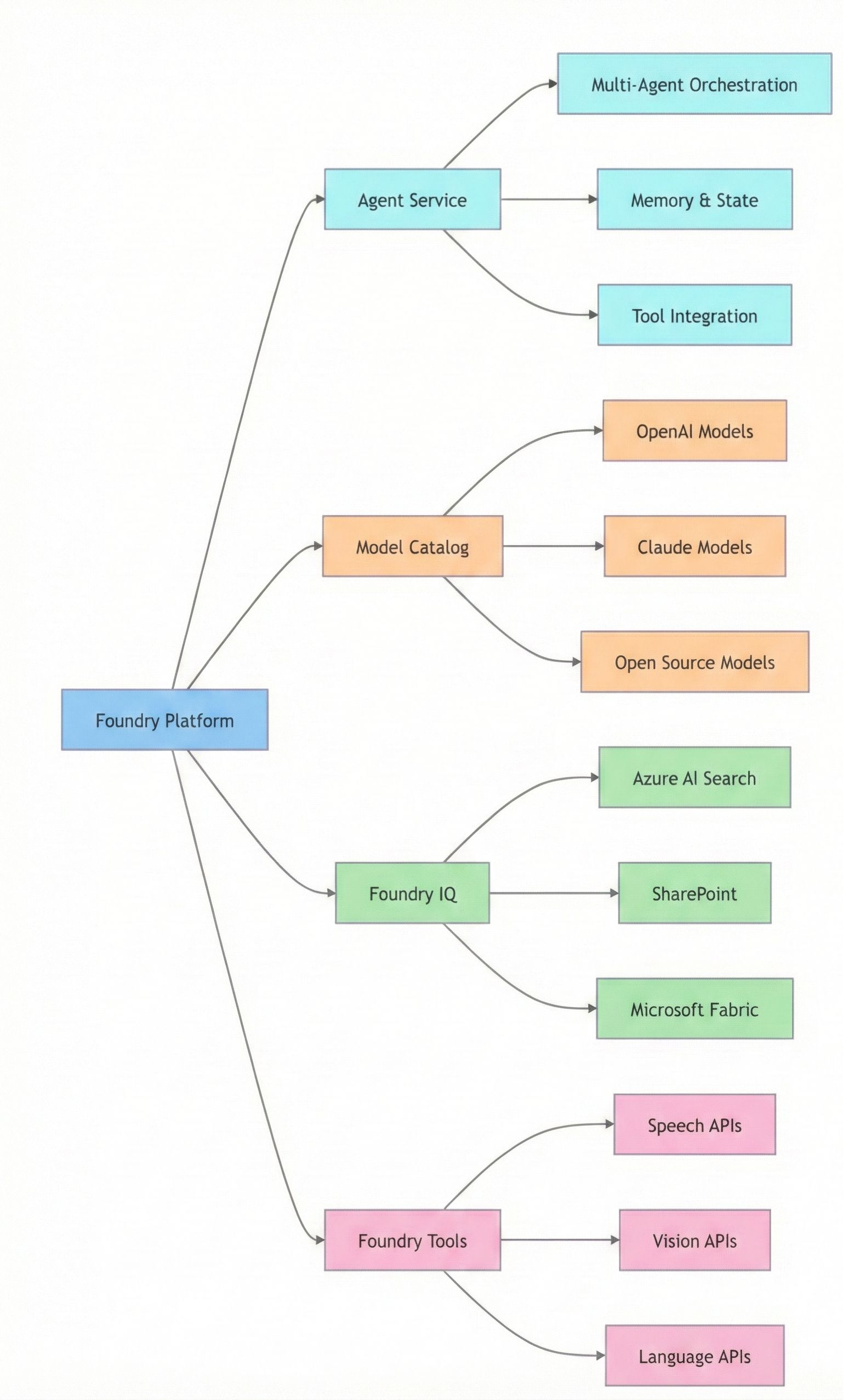

Foundry brings everything needed to build enterprise AI applications into one place.

Model Catalog

Over 11,000 models from OpenAI, Anthropic (Claude), Meta, Mistral AI, Cohere, xAI (Grok), and others. Think of it like a buffet: you can try GPT-4, Claude Opus, Llama, or Mistral side by side, compare results, and pick what fits your use case best.

Foundry Agent Service

The real star of the show. This managed service lets you build AI agents that can remember context, use tools, talk to other agents, and execute multi-step workflows. It reached general availability this year and quickly became the backbone of enterprise AI automation.

Foundry IQ

A knowledge layer built on Azure AI Search that gives agents secure access to enterprise data. It takes care of retrieval-augmented generation (RAG) while respecting user permissions. For many teams, building custom RAG pipelines from scratch simply stopped being necessary.

Foundry Tools

Pre-built capabilities for speech, translation, document processing, and vision. These act as ready-made building blocks that agents can use immediately - no custom model training required.

So how do all these pieces come together in practice?

The Agent Revolution: Multi-Agent Orchestration

One of the most exciting capabilities in Foundry Agent Service is multi-agent orchestration. Instead of building one massive AI system that tries to do everything - and usually doesn’t do any one thing particularly well - teams can now create smaller, specialized agents that work together.

Think about a customer support system.

You might have:

Triage Agent – Understands the customer’s issue and decides where it should go

Knowledge Agent – Searches documentation and past tickets for relevant information

Resolution Agent – Proposes solutions based on what the knowledge agent finds

Escalation Agent – Knows when to hand things over to a human

Each agent has a clear purpose and does its job well. They collaborate behind the scenes, sharing context through a single conversation thread and communicating using the Agent-to-Agent (A2A) protocol.

The result feels less like a chatbot answering questions - and more like a small team working together to solve a problem.
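To make the hand-offs concrete, here is a minimal sketch of the four support agents sharing one thread. It is plain Python, not the Foundry Agent Service SDK or the A2A protocol: the `Thread` class, the agent functions, and the tiny `KNOWLEDGE_BASE` are all hypothetical stand-ins, and the "reasoning" is a naive keyword match where a real agent would call a model.

```python
from dataclasses import dataclass, field


@dataclass
class Thread:
    """Shared conversation thread that every agent reads from and appends to."""
    messages: list = field(default_factory=list)

    def post(self, author: str, text: str) -> None:
        self.messages.append((author, text))


# Hypothetical knowledge base standing in for documentation and past tickets.
KNOWLEDGE_BASE = {
    "billing": "Refunds are issued within 5 business days.",
    "login": "Reset the password from the account settings page.",
}


def triage_agent(thread: Thread, issue: str) -> str:
    """Classify the issue into a topic (keyword match as a placeholder for a model call)."""
    text = issue.lower()
    topic = next((t for t in KNOWLEDGE_BASE if t in text), "unknown")
    thread.post("triage", f"topic={topic}")
    return topic


def knowledge_agent(thread: Thread, topic: str):
    """Look up an article for the topic; returns None when nothing is found."""
    article = KNOWLEDGE_BASE.get(topic)
    thread.post("knowledge", article or "no article found")
    return article


def resolution_agent(thread: Thread, article: str) -> None:
    """Propose a fix based on what the knowledge agent found."""
    thread.post("resolution", f"Suggested fix: {article}")


def escalation_agent(thread: Thread) -> None:
    """Hand the conversation over to a human."""
    thread.post("escalation", "Escalating to a human support engineer.")


def handle(issue: str) -> Thread:
    """Run the agents in sequence over one shared thread."""
    thread = Thread()
    thread.post("customer", issue)
    topic = triage_agent(thread, issue)
    article = knowledge_agent(thread, topic)
    if article is None:
        escalation_agent(thread)
    else:
        resolution_agent(thread, article)
    return thread
```

Calling `handle("I have a billing question about my invoice")` ends with a resolution message, while an issue that matches no topic ends with an escalation. Each agent only sees the shared thread and its own input, which is what keeps them small and replaceable.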

This kind of setup was built on the Microsoft Agent Framework, which brought together Semantic Kernel and AutoGen into a single, unified SDK.

The framework handled a lot of the heavy lifting for you:

Persistent state management – Agents can remember conversation history across sessions

Tool integration – Agents can call APIs, run code, and query databases

Error handling – Built-in retries and fallback paths when things go wrong

Observability – End-to-end tracing of agent decisions and actions

Instead of wiring all this together yourself, the framework made these capabilities part of the default architecture - so teams could focus on what the agents should do, not on rebuilding plumbing every time.
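To show the kind of plumbing this saves, here is a rough sketch of two of those capabilities done by hand: retries with a fallback path, plus a simple trace of what happened. This is not the Agent Framework API. `call_with_retries` and `make_flaky_tool` are hypothetical names invented for illustration, and the trace is just a list of tuples rather than real distributed tracing.

```python
import time


def call_with_retries(primary, fallback, payload, max_attempts=3, base_delay=0.0, trace=None):
    """Try `primary` up to `max_attempts` times, then use `fallback`.

    Every attempt is appended to `trace` so the decision path can be inspected later.
    """
    trace = trace if trace is not None else []
    for attempt in range(1, max_attempts + 1):
        try:
            result = primary(payload)
        except Exception as exc:
            trace.append(("primary", attempt, f"error: {exc}"))
            time.sleep(base_delay * 2 ** (attempt - 1))  # exponential backoff
        else:
            trace.append(("primary", attempt, "ok"))
            return result
    result = fallback(payload)
    trace.append(("fallback", 1, "ok"))
    return result


def make_flaky_tool(failures):
    """Hypothetical tool that raises `failures` times before it starts succeeding."""
    state = {"calls": 0}

    def tool(text):
        state["calls"] += 1
        if state["calls"] <= failures:
            raise RuntimeError("timeout")
        return text.upper()

    return tool
```

With `make_flaky_tool(2)` the third attempt succeeds and the trace shows two errors followed by an `ok`. With a tool that never recovers, the fallback runs and the trace records that too. A production setup would also bound total time and distinguish retryable errors from permanent ones.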

Model Context Protocol: Opening the Doors

One of the smartest moves Microsoft made this year was embracing the Model Context Protocol (MCP). The easiest way to think about MCP is as a universal connector standard for AI agents.

Before MCP, things were messy. If an agent needed to check a calendar, send an email, or update a CRM record, developers had to write custom integration code for every single tool. MCP changes that. It gives agents a standard way to discover tools and use them - without bespoke plumbing each time.

Microsoft rolled out MCP support across GitHub Copilot, Copilot Studio, Azure AI Foundry, and even Windows 11. They also joined the MCP Steering Committee and contributed improvements to the specification, including stronger authentication patterns.

What this meant in practice was huge.

An agent built in Azure AI Foundry could use the same tools as one built with LangChain, Claude, or ChatGPT. Suddenly, agents weren’t locked into a single ecosystem anymore. The walls came down, and interoperability became the default.
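For a feel of what "a standard way to discover tools and use them" looks like, here is a stripped-down sketch of a server answering the two MCP tool methods, `tools/list` and `tools/call`, as JSON-RPC 2.0 messages. It skips the transport, the initialization handshake, and authentication, and the two tools (`get_calendar_events`, `send_email`) and their canned replies are made up for illustration. Treat it as a shape sketch, not a compliant server.

```python
# Hypothetical tool registry: name -> description, JSON Schema for inputs, handler.
TOOLS = {
    "get_calendar_events": {
        "description": "List calendar events for a given date.",
        "inputSchema": {
            "type": "object",
            "properties": {"date": {"type": "string"}},
            "required": ["date"],
        },
        "handler": lambda args: f"No events on {args['date']}",
    },
    "send_email": {
        "description": "Send an email to a recipient.",
        "inputSchema": {
            "type": "object",
            "properties": {"to": {"type": "string"}, "body": {"type": "string"}},
            "required": ["to", "body"],
        },
        "handler": lambda args: f"Email queued for {args['to']}",
    },
}


def _error(request_id, code, message):
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle_request(request: dict) -> dict:
    """Answer a single JSON-RPC request for tool discovery or tool invocation."""
    request_id = request.get("id")
    method = request.get("method")

    if method == "tools/list":
        # Discovery: the agent learns what exists and how to call it.
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": tools}}

    if method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(request_id, -32602, "Unknown tool")
        text = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
        return {"jsonrpc": "2.0", "id": request_id, "result": result}

    return _error(request_id, -32601, "Method not found")
```

The point is that the agent never needs tool-specific client code: it lists the tools, reads each schema, and issues a `tools/call` with matching arguments. Swapping the calendar backend changes the handler, not the agent.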

Claude Meets Azure: The Partnership That Mattered

For years, Azure’s AI story revolved almost entirely around OpenAI models. That changed in a big way this year when Microsoft brought Claude from Anthropic into Microsoft Foundry.

Suddenly, Microsoft could position Azure as the only major cloud platform offering frontier models from both OpenAI and Anthropic side by side - with the same enterprise-grade security, compliance, and governance controls.

That mattered because different models are good at different things.

OpenAI GPT-4 remained a strong choice for conversational experiences and creative writing

Claude Opus and Sonnet excelled at nuanced reasoning, following complex instructions, and analyzing large documents

Open-source models like Llama or Mistral offered cost-effective options for very specific tasks

Instead of arguing endlessly about “the best model,” teams could simply use the right model for the job.

Model Router

Foundry’s Model Router made this even easier. Developers could automatically route prompts to the most suitable model based on quality, latency, or cost. A customer support agent, for example, might use GPT-4 for friendly conversation but switch to Claude for complex policy questions that require careful reasoning.
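As a rough illustration of routing on quality, latency, and cost, here is a rule-based sketch. It is not how Foundry's Model Router decides internally, and the model names and numbers in `CATALOG` are invented placeholders rather than real benchmarks or prices. The idea is simply: filter out models that miss the constraints, then pick the cheapest of what remains.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    quality: float      # illustrative score between 0 and 1, higher is better
    latency_ms: float   # illustrative typical response time
    cost_per_1k: float  # illustrative cost per 1,000 tokens


# Placeholder catalog; every value here is an assumption for the example.
CATALOG = [
    ModelProfile("large-reasoning-model", quality=0.95, latency_ms=2500, cost_per_1k=0.060),
    ModelProfile("general-chat-model", quality=0.85, latency_ms=900, cost_per_1k=0.010),
    ModelProfile("small-fast-model", quality=0.70, latency_ms=200, cost_per_1k=0.001),
]


def route(min_quality: float, max_latency_ms: float, catalog=CATALOG) -> ModelProfile:
    """Return the cheapest model that meets the quality and latency constraints."""
    eligible = [
        m for m in catalog
        if m.quality >= min_quality and m.latency_ms <= max_latency_ms
    ]
    if not eligible:
        raise ValueError("no model satisfies the constraints")
    return min(eligible, key=lambda m: m.cost_per_1k)
```

A friendly chat turn might call `route(0.8, 1000)` and land on the mid-sized model, while a complex policy question could call `route(0.9, 5000)` and accept the slower, more capable one.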

The real win wasn’t cheaper tokens or shinier benchmarks. It was choice. Architects finally had the flexibility to design AI systems around business needs - not around a single model ecosystem.

Deep Research: When Agents Became Researchers

One feature that really showed what agentic AI could do was Deep Research, which entered public preview in July. This wasn’t an agent that simply searched the web and summarized results. It behaved much more like a real researcher.

When you gave Deep Research a question, it didn’t jump straight to an answer. Instead, it worked through the problem step by step.

What made it different was how it worked:

Planned an investigation – breaking a big question into smaller, answerable parts

Searched iteratively – using each finding to guide the next search

Synthesized across sources – connecting dots that a single search would miss

Validated findings – cross-checking information for accuracy

Produced auditable reports – with every claim linked back to a source
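The loop behind those steps can be sketched in a few lines. This is a toy, not the Deep Research agent: the `CORPUS` dictionary stands in for a search backend, its entries are placeholder text rather than real findings, `plan` returns a fixed list where a real agent would ask a model, and the validation step is left out entirely.

```python
# Hypothetical search backend: query -> (source id, placeholder finding, follow-up query or None).
CORPUS = {
    "export policy": ("source-policy", "placeholder finding about licence rules", "affected companies"),
    "affected companies": ("source-filing", "placeholder finding about company exposure", None),
    "analyst views": ("source-analyst", "placeholder finding about market outlook", None),
}


def plan(question: str) -> list:
    """Break the question into sub-queries (fixed here; a real agent would use a model)."""
    return ["export policy", "analyst views"]


def research(question: str, max_steps: int = 5) -> list:
    """Search iteratively, letting each finding steer the next search."""
    queue = plan(question)
    seen, findings = set(), []
    while queue and len(findings) < max_steps:
        query = queue.pop(0)
        if query in seen or query not in CORPUS:
            continue
        seen.add(query)
        source, finding, follow_up = CORPUS[query]
        findings.append({"claim": finding, "source": source})
        if follow_up:
            queue.insert(0, follow_up)  # follow the lead before returning to the plan
    return findings


def report(findings: list) -> str:
    """Produce an auditable report: every claim carries its source."""
    return "\n".join(f"- {f['claim']} [{f['source']}]" for f in findings)
```

Notice that the policy finding pulls "affected companies" to the front of the queue before the planned analyst search runs. That is the difference between iterating on findings and firing off a fixed batch of queries.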

For example, ask it:

“How should recent semiconductor export restrictions affect investment strategy in tech companies?”

Deep Research might:

Look up policy announcements from multiple governments

Analyze financial filings from affected companies

Review analyst commentary from different sources

Pull everything together into a clear strategic assessment

This wasn’t just “web search with extra steps.” Under the hood, the agent used OpenAI’s o3-deep-research model, which brought genuine reasoning into the loop. It relied on Bing Search for authoritative sources and integrated with Azure Logic Apps to orchestrate the workflow.

Organizations started using Deep Research for things that previously took days or weeks:

Automating competitive intelligence

Producing in-depth market research reports

Analyzing regulatory changes and their business impact

Synthesizing insights from academic and industry research

It was one of the clearest signals in 2025 that AI agents weren’t just assistants anymore - they were capable of doing real analytical work, end to end.

Data Integration: Where Intelligence Met Information

Agents without access to real data are just expensive chatbots. Microsoft clearly understood this, and in 2025 invested heavily in connecting Foundry to enterprise data sources.

Azure AI Search with Agentic Retrieval

Traditional RAG (retrieval-augmented generation) pipelines typically run a single retrieval pass per question, matching on keywords or vector similarity. They search, return documents, and let the model figure things out. Agentic retrieval in Azure AI Search took a much smarter approach.

Instead of just matching words, it:

Understands the intent behind a question

Breaks complex queries into multiple searches

Pulls insights from across different documents

Automatically respects user access permissions

In early testing, Microsoft reported up to a 40% improvement in answer relevance for complex, multi-part questions. For enterprise scenarios, that difference is very noticeable.
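Two of those behaviors, splitting a question into several searches and trimming results by permission, are easy to sketch. The code below is not the Azure AI Search API: the documents, group names, and the word-overlap scoring are all assumptions chosen to keep the example self-contained, and the decomposition is a naive split on "and" where the real service uses a model.

```python
# Hypothetical index: each document lists the groups allowed to read it.
DOCS = [
    {"id": "policy-1", "text": "remote work policy allows three days per week", "groups": {"all"}},
    {"id": "finance-7", "text": "travel budget for 2025 is capped per department", "groups": {"finance"}},
    {"id": "hr-3", "text": "parental leave is sixteen weeks", "groups": {"hr", "all"}},
]

STOPWORDS = {"what", "is", "the", "a", "for", "of"}


def decompose(question: str) -> list:
    """Split a multi-part question into sub-queries (naive split on 'and')."""
    parts = [p.strip(" ?") for p in question.lower().split(" and ")]
    return [p for p in parts if p]


def search(subquery: str, user_groups: set, top_k: int = 1) -> list:
    """Rank readable documents by word overlap; documents the user cannot read are skipped."""
    terms = set(subquery.split()) - STOPWORDS
    scored = []
    for doc in DOCS:
        if not doc["groups"] & user_groups:
            continue  # security trimming happens before ranking
        overlap = len(terms & set(doc["text"].split()))
        if overlap:
            scored.append((overlap, doc["id"]))
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [doc_id for _, doc_id in scored[:top_k]]


def agentic_retrieve(question: str, user_groups: set) -> list:
    """Run one search per sub-query and merge the results without duplicates."""
    results = []
    for sub in decompose(question):
        for doc_id in search(sub, user_groups):
            if doc_id not in results:
                results.append(doc_id)
    return results
```

Asking "What is the remote work policy and what is the travel budget?" as a member of only the `all` group returns just the policy document. Add the `finance` group and the budget document shows up as well, with no change to the question.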

Microsoft Fabric Integration

Fabric IQ added another important piece: a semantic layer over organizational data. Instead of agents dealing with raw tables and schemas, data was mapped to familiar business concepts.

So when an agent was asked, “What was Q4 revenue?”, it automatically knew to look at:

Sales data from the CRM

Financial figures from the ERP

Operational metrics from production systems

All of this happened without developers writing complex joins, mappings, or transformation logic.
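A semantic layer is, at its simplest, a mapping from business terms to the places the data lives. The sketch below shows that idea only. It is not Fabric IQ's API or data model, and the systems, tables, and columns in `SEMANTIC_MODEL` are hypothetical names.

```python
# Hypothetical semantic model: business concept -> physical sources that define it.
SEMANTIC_MODEL = {
    "revenue": [
        {"system": "CRM", "table": "sales_orders", "column": "amount"},
        {"system": "ERP", "table": "gl_entries", "column": "net_revenue"},
    ],
    "headcount": [
        {"system": "HRIS", "table": "employees", "column": "employee_id"},
    ],
}

# Quarter label -> (first month, last month), assuming a calendar fiscal year.
QUARTERS = {"q1": (1, 3), "q2": (4, 6), "q3": (7, 9), "q4": (10, 12)}


def resolve(question: str) -> dict:
    """Map a business question to the concept, time window, and sources it refers to."""
    words = question.lower().replace("?", "").split()
    concept = next((w for w in words if w in SEMANTIC_MODEL), None)
    if concept is None:
        raise LookupError("no known business concept in question")
    quarter = next((w for w in words if w in QUARTERS), None)
    return {
        "concept": concept,
        "months": QUARTERS.get(quarter),
        "sources": SEMANTIC_MODEL[concept],
    }
```

`resolve("What was Q4 revenue?")` comes back with the `revenue` concept, months 10 through 12, and the CRM and ERP sources. The agent works with "revenue" and "Q4"; the mapping to tables and columns is maintained once, in the model.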

This is where things really clicked. Agents weren’t just smart anymore - they were informed. And once intelligence meets trusted enterprise data, AI stops being a demo and starts becoming genuinely useful.

Azure HorizonDB: PostgreSQL Reimagined

Another interesting addition to Azure’s data lineup this year was Azure HorizonDB. It’s a fully managed, PostgreSQL-compatible database built specifically with AI workloads in mind.

HorizonDB brought a few important capabilities together:

Built-in vector indexing using DiskANN for fast similarity searches

Native AI model integration, enabling in-database inference

Microsoft Fabric connectivity for unified access to enterprise data

The real impact was architectural. Teams no longer had to run a separate vector database alongside PostgreSQL just to support AI use cases. Transactional data and vector embeddings could live in the same place, simplifying design, operations, and cost.
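What "same place" buys you is a single query that filters on ordinary columns and ranks by vector similarity together. Here is that shape in plain Python, assuming a made-up `ORDERS` table with two-dimensional embeddings. It is a brute-force scan for illustration; a real deployment would express this in SQL and lean on an approximate index such as DiskANN instead.

```python
import math

# Hypothetical rows: transactional columns and an embedding stored side by side.
ORDERS = [
    {"id": 1, "customer": "acme", "status": "open", "embedding": [1.0, 0.0]},
    {"id": 2, "customer": "acme", "status": "closed", "embedding": [0.9, 0.1]},
    {"id": 3, "customer": "globex", "status": "open", "embedding": [0.0, 1.0]},
    {"id": 4, "customer": "acme", "status": "open", "embedding": [0.6, 0.8]},
]


def cosine(a, b) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similar_open_orders(customer: str, query_embedding, limit: int = 2) -> list:
    """Filter on transactional columns, then rank the survivors by similarity."""
    candidates = [
        row for row in ORDERS
        if row["customer"] == customer and row["status"] == "open"
    ]
    candidates.sort(key=lambda row: cosine(row["embedding"], query_embedding), reverse=True)
    return [row["id"] for row in candidates[:limit]]
```

With two separate databases, the filter and the ranking would run in different systems and the application would have to join the results. Here the closed order and the other customer's order never reach the similarity step at all.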

For architects, this meant fewer moving parts - and one less “special database” to justify in production designs.

What to Watch in 2026

The pace of innovation hasn’t slowed. If anything, it’s accelerating. Here’s what’s coming next:

Extended data source support – Deep Research is expected to go beyond web search and include internal document repositories, enabling research across proprietary enterprise data.

Advanced multi-agent patterns – More sophisticated orchestration, including agent teams that form dynamically based on the task at hand.

Improved model fine-tuning – Simpler ways to adapt models using organizational data, while still preserving privacy and governance.

Foundry Local – The ability to run agents on-device for offline scenarios and improved privacy. It’s currently in private preview on Android and likely to expand in 2026.

Enhanced compliance tooling – Deeper integration with Microsoft Purview for data governance and regulatory compliance.

Practical Advice for Architects

After seeing hundreds of AI implementations this year, a few patterns showed up again and again.

Start with a real problem, not the technology. The teams getting the best results picked a painful, repetitive workflow and asked, “Could an agent help here?” - not “We should build an AI agent.”

Design for collaboration early. Even if you begin with a single agent, assume others will join later. Use clear interfaces, shared state, and message-based communication from day one.

Invest in observability early. Agent debugging tools improved a lot this year, but comprehensive logging and tracing from the start still made the biggest difference.

Don’t underestimate the data challenge either - agents are only as good as the information they can access, and data integration is usually most of the work.

Embrace model diversity instead of locking into one provider. A single workflow might use OpenAI GPT-4 for creative tasks, Claude for deeper analysis, and a smaller model for classification.

And finally, plan for governance early. As agent capabilities grow, so do risks - access controls, audit logs, and approval workflows are much easier to add upfront than under pressure later.
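The governance advice is the easiest to postpone, so here is how small a first version can be. This sketch gates a hypothetical set of high-risk actions behind an approver callback and writes every decision to an audit log. The action names, the `approver` signature, and the log format are assumptions, and the side effect itself is left out so the example stays focused on the gate.

```python
from datetime import datetime, timezone

# Hypothetical list of actions that require sign-off before an agent may run them.
HIGH_RISK_ACTIONS = {"issue_refund", "delete_record"}


def execute_action(agent, action, params, audit_log, approver=None):
    """Allow low-risk actions, require approval for high-risk ones, and log both outcomes."""
    needs_approval = action in HIGH_RISK_ACTIONS
    approved = not needs_approval or (
        approver is not None and bool(approver(action, params))
    )
    outcome = "executed" if approved else "blocked"
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "action": action,
        "params": params,
        "outcome": outcome,
    })
    # The real side effect (calling the refund API, say) would run here when approved.
    return outcome
```

A lookup goes straight through, a refund with no approver is blocked, and a refund under an approver's threshold is executed. All three leave an entry in the log, which is the part that is painful to retrofit later.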

Final Thoughts

What happened in Azure AI this year wasn’t just a collection of new features. It marked a shift in how software gets built.

For decades, software followed explicit instructions: “If this condition is true, do that action.” Agents flip that model around: “Here’s the goal - figure out how to achieve it.”

That doesn’t make developers less important. If anything, it makes their role more critical. Someone still has to design the agent architecture, define boundaries and constraints, implement observability and governance, handle edge cases, and ensure security and compliance.

The tools changed. The responsibility didn’t. Building reliable systems that solve real problems is still the hard part - and it always will be.

Looking ahead to 2026, the focus will shift from proving that agents work to scaling them responsibly. Expect deeper integration with enterprise data, more advanced multi-agent coordination, better on-device and offline capabilities, and tighter governance woven directly into the platform. The question won’t be whether agents belong in production - it will be how far organizations are willing to trust them with real decisions.

The teams that succeed won’t be the ones chasing the newest models. They’ll be the ones who treat agents like any other critical system: designed thoughtfully, observed carefully, and evolved deliberately over time.
