How to Size an AKS Cluster for Production

Sizing an AKS cluster often feels like packing for a holiday when you don’t quite know what the weather will be like. Pack too light, and you’ll be unprepared when conditions change. Pack too heavy, and you end up carrying unnecessary baggage - which, in cloud terms, translates directly into wasted cost.

After working with dozens of organisations deploying AKS in real production environments, one thing becomes clear: while every workload is different, the mistakes people make during sizing are remarkably consistent. More importantly, there are repeatable patterns you can use to make sizing decisions with far more confidence than guesswork.

Understanding What You’re Actually Sizing

Before jumping into VM SKUs, node counts, or autoscaling thresholds, it’s worth stepping back and clarifying what an AKS cluster really is.

At its core, an AKS cluster is a fleet of virtual machines - called nodes - working together to run containerised workloads. Each node is a worker with a finite amount of CPU and memory. Your applications, packaged as containers, are the jobs those workers need to execute.

Sizing an AKS cluster, then, is not about picking “the right number” upfront. It’s about answering two fundamental questions:

How many workers do I need right now?

How much headroom do I need to safely handle growth, spikes, and failures?

Once you frame sizing this way, the problem becomes far more practical - and far less intimidating.

The Pillars of Cluster Sizing

1. Workload Requirements

Start with what you’re actually running. Each containerised application - running in a Kubernetes pod - consumes CPU and memory. A simple web frontend might need 0.5 vCPU and 512 MB RAM, while a data-processing service could require 2 vCPUs and 4 GB RAM.

In Kubernetes, this is expressed using resource requests. Requests define the minimum guaranteed resources for a pod and are the only CPU and memory values the scheduler uses for placement. When it decides which node can run a pod, it checks whether the pod’s requests fit into the node’s remaining allocatable capacity, and it ignores limits entirely.

You can optionally define limits, which cap how much CPU or memory a pod may consume at runtime. Limits are enforced after the pod is scheduled and do not increase schedulable capacity, so they do not factor into cluster sizing.

Example

A typical web application pod might define:

CPU request: 250m (0.25 vCPU)

Memory request: 256 MB

For sizing purposes, these are the only numbers that matter.

If you run 20 replicas of this pod, the cluster must provide at minimum:

CPU: 5 vCPUs (20 × 0.25)

Memory: 5 GB RAM (20 × 256 MB)

This is the baseline capacity required just to schedule these pods - before accounting for system overhead, headroom, or failures.
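Once you have more than a handful of workloads, the arithmetic above is easy to script. The following is a minimal Python sketch. It assumes requests are written as Kubernetes quantity strings using only the `m` CPU suffix and the `Mi`/`Gi` memory suffixes. `parse_cpu_millicores`, `parse_memory_mib`, `Workload` and `baseline_requests` are hypothetical helpers, not part of any Kubernetes client library.

```python
from dataclasses import dataclass

# Binary memory suffixes handled by this sketch (Kubernetes accepts more).
_MEMORY_SUFFIXES = {"Mi": 1, "Gi": 1024}


def parse_cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '250m' or '2' into millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def parse_memory_mib(quantity: str) -> int:
    """Convert a memory quantity such as '256Mi' or '4Gi' into MiB."""
    for suffix, factor in _MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    raise ValueError(f"unsupported memory quantity: {quantity}")


@dataclass
class Workload:
    name: str
    replicas: int
    cpu_request: str      # e.g. "250m"
    memory_request: str   # e.g. "256Mi"


def baseline_requests(workloads):
    """Sum replicas x requests; limits are deliberately ignored."""
    cpu_m = sum(w.replicas * parse_cpu_millicores(w.cpu_request) for w in workloads)
    mem_mib = sum(w.replicas * parse_memory_mib(w.memory_request) for w in workloads)
    return cpu_m, mem_mib


if __name__ == "__main__":
    web = Workload("web", replicas=20, cpu_request="250m", memory_request="256Mi")
    cpu_m, mem_mib = baseline_requests([web])
    print(f"CPU: {cpu_m / 1000} vCPU, memory: {mem_mib / 1024} GiB")
```

For the 20-replica web pod, this prints 5.0 vCPU and 5.0 GiB, which matches the hand calculation.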

Key rule:

Clusters are sized for requests.

If limits are frequently hit, it’s a signal to fix the requests, not to size for limits.

2. High Availability and Fault Tolerance

Running a production workload on a single node is like having only one person in the organisation who knows how to perform a critical task. When they’re on leave - or worse, unavailable without notice - work simply stops.

AKS nodes are no different. They will fail: during platform maintenance, kernel upgrades, VM reboots, scaling events, or plain bad luck. Designing for availability means assuming failure as a normal condition, not an exception.

For production workloads, applications should be spread across at least three nodes. This ensures that when one node disappears, the remaining nodes can continue to serve traffic without interruption.

How Fault Tolerance Changes the Math

This is where cluster sizing quietly shifts from capacity planning to resilience planning.

Let’s say your traffic profile requires 10 replicas of a pod to operate comfortably under normal conditions. If you spread those replicas evenly across three nodes and one node fails, you immediately lose roughly a third of your capacity.

To survive that failure without degradation, you need spare headroom.

In practice, that means:

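Baseline requirement: 10 replicas for steady-state traffic

Failure buffer: enough extra replicas that losing any one node still leaves 10 running

Effective target: 15 replicas on 3 nodes (5 per node). Spreading across more nodes shrinks the buffer: for example, 13 replicas on 5 nodes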

Those extra replicas aren’t waste - they’re insurance. They absorb node failures, rolling upgrades, and sudden load spikes without forcing emergency scaling or customer-visible impact.
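How big the buffer must be depends on how many nodes the replicas are spread over. The following sketch finds the smallest replica count that still leaves the baseline running after the busiest node (or nodes) fail. It assumes an as-even-as-possible spread, such as the one topology spread constraints aim for, and counts only pods still running before any rescheduling. The function names are hypothetical; Kubernetes provides nothing like them.

```python
def spread(total: int, nodes: int) -> list[int]:
    """Replicas per node for an as-even-as-possible spread, busiest first."""
    base, extra = divmod(total, nodes)
    return [base + 1] * extra + [base] * (nodes - extra)


def surviving_replicas(total: int, nodes: int, failed_nodes: int = 1) -> int:
    """Replicas still running after the busiest `failed_nodes` nodes disappear."""
    return total - sum(spread(total, nodes)[:failed_nodes])


def replicas_for_failure(baseline: int, nodes: int, failed_nodes: int = 1) -> int:
    """Smallest replica count that keeps `baseline` replicas up after failures."""
    if failed_nodes >= nodes:
        raise ValueError("at least one node must survive")
    total = baseline
    while surviving_replicas(total, nodes, failed_nodes) < baseline:
        total += 1
    return total


if __name__ == "__main__":
    for nodes in (3, 4, 5, 6):
        print(f"{nodes} nodes: {replicas_for_failure(10, nodes)} replicas")
```

With a baseline of 10, this gives 15 replicas on three nodes and 13 on five. The more nodes the replicas are spread over, the less each single failure takes away.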

The Architectural Takeaway

High availability is not achieved by Kubernetes alone.

It’s achieved by over-provisioning with intent.

If your cluster only has enough capacity to run exactly what you need in the happy path, it is not highly available - it is fragile.

In AKS, resilience comes from:

Multiple nodes

Even pod distribution

And deliberate spare capacity to handle failure

Anything less is optimism dressed up as architecture.

3. Growth and Scaling Patterns

Applications never stay static. Traffic grows, features expand, and data volumes increase. If you size only for today, you’re already behind.

A cluster running at ~70% utilisation is healthy.

At ~95%, you’re one spike away from an incident.

Plan for how your application grows:

Gradual growth (steady user adoption):

Keep 20–30% headroom and revisit sizing periodically.

Seasonal spikes (sales events, reporting cycles):

Design for 2–3× normal capacity at peak and pre-scale where possible.

Unpredictable growth (viral traffic, public APIs):

Autoscaling is mandatory - tune and test it, don’t just enable it.

Rule of thumb:

Size AKS for comfort, not survival. If the cluster is always “just enough,” failure is only a matter of time.

The Practical Sizing Process

Let’s walk through a realistic AKS sizing example for an e-commerce platform.

Application Inventory (Pod Requests)

Frontend:

10 replicas × (0.5 vCPU, 0.5 GB RAM)

API:

15 replicas × (1 vCPU, 1 GB RAM)

Background jobs:

5 replicas × (2 vCPU, 4 GB RAM)

Database (stateful):

3 replicas × (4 vCPU, 8 GB RAM)

Step 1: Total Application Requests

CPU

(10×0.5) + (15×1) + (5×2) + (3×4) = 42 vCPUs

Memory

(10×0.5) + (15×1) + (5×4) + (3×8) = 64 GB

Step 2: Add System Overhead (Realistic AKS Reservations)

CPU: 42 × 1.10 ≈ 46 vCPUs

Memory: 64 × 1.25 = 80 GB

Step 3: Add Headroom (20%)

CPU: 46 × 1.2 ≈ 55 vCPUs

Memory: 80 × 1.2 = 96 GB

Step 4: Map to Node Size

Using Standard_D8s_v5 (8 vCPU, 32 GB RAM):

CPU-driven nodes:

55 ÷ 8 ≈ 6.9, rounded up to 7 nodes

Memory-driven nodes (80% usable):

96 ÷ 25.6 = 3.75, rounded up to 4 nodes

CPU is the limiting factor, so 7 nodes would technically satisfy the requirement.
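Steps 1 to 4 can be captured in a few lines. The following is a minimal sketch, not an AKS tool. The 10% CPU overhead, 25% memory overhead, 20% headroom and 80% usable memory are the rule-of-thumb multipliers from this walkthrough, exposed as parameters. `Workload`, `total_requests` and `nodes_needed` are hypothetical names. Unlike the hand calculation, it does not round intermediate values, but it reaches the same node counts.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    replicas: int
    cpu: float      # vCPU requested per replica
    memory: float   # GB requested per replica


def total_requests(workloads):
    """Step 1: sum replicas x requests across all workloads."""
    cpu = sum(w.replicas * w.cpu for w in workloads)
    memory = sum(w.replicas * w.memory for w in workloads)
    return cpu, memory


def nodes_needed(workloads, node_cpu, node_memory,
                 cpu_overhead=1.10, memory_overhead=1.25,
                 headroom=1.20, usable_memory_fraction=0.80):
    """Steps 2-4: add overhead and headroom, then divide by node size.

    The default multipliers are rule-of-thumb assumptions, not values
    published by AKS.
    """
    cpu, memory = total_requests(workloads)
    cpu_target = cpu * cpu_overhead * headroom
    memory_target = memory * memory_overhead * headroom
    cpu_nodes = math.ceil(cpu_target / node_cpu)
    memory_nodes = math.ceil(memory_target / (node_memory * usable_memory_fraction))
    return cpu_nodes, memory_nodes


if __name__ == "__main__":
    inventory = [
        Workload("frontend", 10, 0.5, 0.5),
        Workload("api", 15, 1, 1),
        Workload("background-jobs", 5, 2, 4),
        Workload("database", 3, 4, 8),
    ]
    # Standard_D8s_v5: 8 vCPU, 32 GB
    cpu_nodes, memory_nodes = nodes_needed(inventory, node_cpu=8, node_memory=32)
    print(f"CPU needs {cpu_nodes} nodes, memory needs {memory_nodes}; "
          f"provision {max(cpu_nodes, memory_nodes)}")
```

The larger of the two counts wins, which is why CPU drives the answer here.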

But this is where experienced AKS design diverges from “just enough math”, and where a better approach emerges.

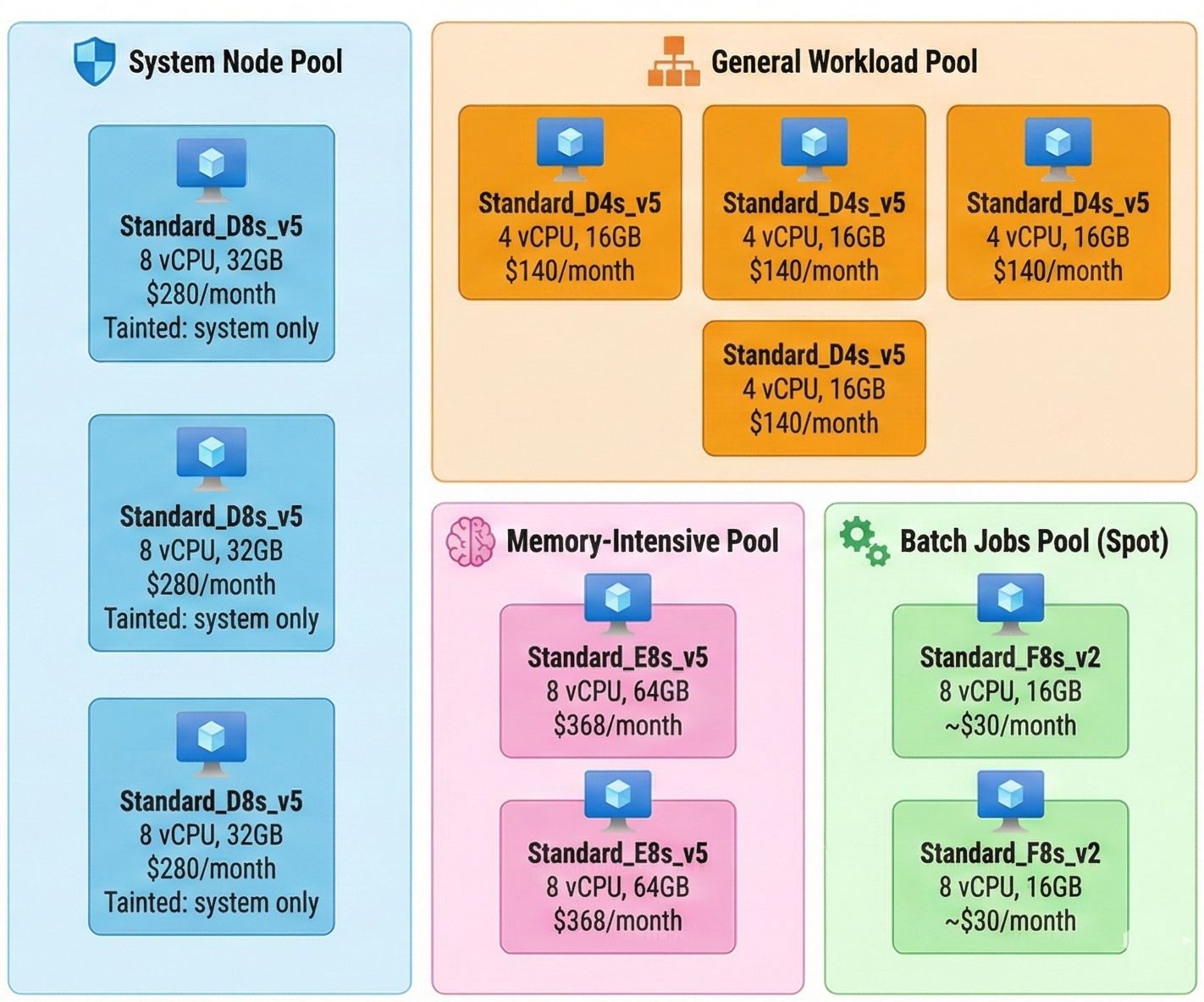

Node Pools: The Secret Weapon

A common AKS anti-pattern is building a single, uniform cluster and hoping it works for every workload. It rarely does.

Using multiple node pools lets you match workloads to the hardware they actually need. Think of it like building a team of specialists instead of expecting everyone to be a generalist. You get better performance, higher efficiency, and lower cost - all at the same time.

Match Workloads to the Right VM Series

Different workloads stress different resources. AKS lets you reflect this reality directly in your architecture.

| Workload Type | Recommended VM Series | Why It Fits | RAM (GB) : vCPU |
|---|---|---|---|
| System / Core Services | D-Series (D2s–D4s) | Balanced CPU and memory for cluster services | ~4:1 |
| General Apps (Web / API) | D-Series (D4s–D8s) | Strong all-round performance for stateless workloads | ~4:1 |
| Databases / Cache | E-Series (E4s–E16s) | High memory per core for stateful workloads | ~8:1 |
| Batch / Compute | F-Series | Optimised for CPU-heavy processing | ~2:1 |
| ML / AI Training | NC-Series / ND-Series | GPU-accelerated compute | Varies |

Why This Matters

Separating workloads into node pools gives you:

Independent scaling per workload type

Better bin-packing and higher utilisation

Lower blast radius when nodes fail or scale

Freedom to tune autoscaling and maintenance windows
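The bin-packing benefit is easiest to see by counting stranded capacity. This is the part of a node that no further pod can use because the other resource ran out first. The following minimal sketch assumes identical pods and ignores kubelet reservations and DaemonSets, so real pod counts will be somewhat lower. `NodeShape` and `pack` are hypothetical names. It packs the 2 vCPU / 4 GB background-job pod from the e-commerce example onto three 8-vCPU shapes from the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeShape:
    sku: str
    vcpu: int
    memory_gb: int


def pack(node: NodeShape, pod_vcpu: int, pod_memory_gb: int):
    """Return (pods_per_node, stranded_vcpu, stranded_memory_gb).

    Ignores node reservations, so it shows the ratio mismatch, not exact
    allocatable capacity.
    """
    pods = min(node.vcpu // pod_vcpu, node.memory_gb // pod_memory_gb)
    stranded_vcpu = node.vcpu - pods * pod_vcpu
    stranded_memory = node.memory_gb - pods * pod_memory_gb
    return pods, stranded_vcpu, stranded_memory


if __name__ == "__main__":
    shapes = [
        NodeShape("Standard_D8s_v5", 8, 32),
        NodeShape("Standard_F8s_v2", 8, 16),
        NodeShape("Standard_E8s_v5", 8, 64),
    ]
    for shape in shapes:
        pods, cpu_left, mem_left = pack(shape, pod_vcpu=2, pod_memory_gb=4)
        print(f"{shape.sku}: {pods} pods/node, "
              f"{cpu_left} vCPU and {mem_left} GB stranded")
```

All three shapes fit four of these CPU-bound pods. The D-series node strands 16 GB and the E-series node 48 GB, while the 2:1 F-series node uses everything it pays for.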

Sizing the System Node Pool Correctly

The system node pool deserves special attention. This pool runs critical Kubernetes components like CoreDNS, metrics-server, and cluster monitoring agents. These aren't your application workloads - they're the infrastructure keeping everything running.

System workload characteristics:

Relatively stable resource consumption

Critical for cluster health (can't afford resource starvation)

Lower overall resource needs compared to applications

Should never run application pods (use taints to prevent this)

Microsoft's Official Sizing Guidance:

Minimum nodes: 3 nodes of 8 vCPUs each for production (or 2 nodes with at least 16 vCPUs each)

Recommended for typical production: 3 × Standard_D8s_v5 (8 vCPU, 32GB) or similar

Recommended for large/complex clusters: Standard_D16ds_v5 (16 vCPU, 64GB) with ephemeral OS disks

"Large clusters" means: multiple CoreDNS replicas, 3-4+ cluster add-ons, service mesh deployments, or clusters with hundreds of nodes

The key factor is cluster complexity, not just node count. A 20-node cluster running Istio service mesh with advanced monitoring needs more system capacity than a 50-node cluster running basic workloads.

Strategy for Our E-Commerce Example

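System pool:

3 × Standard_D8s_v5 (8 vCPU, 32 GB) → $840/month

Web / API pool:

7 × Standard_D4s_v5 (4 vCPU, 16 GB) → $980/month

Background jobs pool (Spot):

3 × Standard_F8s_v2 (8 vCPU, 16 GB) → ~$90/month (~90% discount)

Database pool (memory-optimised):

3 × Standard_E8s_v5 (8 vCPU, 64 GB) → $1,104/month

The Web / API pool needs seven nodes because its pods request 20 vCPUs (10 × 0.5 + 15 × 1), or about 26 vCPUs after the 10% overhead and 20% headroom used earlier. Four 4-vCPU nodes would not even cover the raw requests. The database pool needs three nodes because two 4-vCPU replicas cannot share an 8-vCPU node once AKS reserves part of its CPU for system use. Three nodes also meets the high-availability minimum.

Total Monthly Cost

≈ $3,014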

This setup prioritises system stability, clean workload isolation, and cost efficiency - appropriate for a production-grade AKS cluster with predictable traffic and standard observability requirements.

Azure Spot Instances: The Cost Game-Changer

For workloads that can tolerate interruptions, Azure Spot instances are one of the biggest cost levers available in AKS. They can deliver up to 90% savings compared to on-demand VMs.

The trade-off is simple: Azure can reclaim a Spot VM with as little as 30 seconds’ notice when capacity is needed elsewhere. If your workload is designed for that reality, the savings are absolutely worth it.

When to Use Spot Instances

Spot works best for workloads that are interruptible by design:

Batch data processing with checkpointing

CI/CD and build agents

Stateless background workers

Video encoding and image processing

Machine learning training jobs (with checkpoints)

These workloads expect restarts. Spot just makes those restarts cheaper.
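In practice, “interruptible by design” means a job records its progress often enough that a replacement pod can pick up where the evicted one stopped. The following is a minimal Python sketch, not a production pattern. It makes two assumptions. First, the checkpoint path sits on storage that outlives the node; a local temp file is used here only for the demo. Second, the pod may receive SIGTERM before the node goes away, which a Spot eviction does not guarantee. Because the checkpoint is written after every item, an abrupt kill loses at most the item in flight. `process_items`, `load_checkpoint`, `save_checkpoint` and the `stop_after` test hook are hypothetical names.

```python
import json
import os
import signal
import tempfile

# Set by the SIGTERM handler; the loop checks it between items.
stop_requested = False


def _on_sigterm(signum, frame):
    global stop_requested
    stop_requested = True


def load_checkpoint(path):
    """Return the index of the next unprocessed item (0 if no checkpoint)."""
    if not os.path.exists(path):
        return 0
    with open(path) as f:
        return json.load(f)["next_index"]


def save_checkpoint(path, next_index):
    """Write via a temp file and os.replace so a kill mid-write keeps the old checkpoint."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"next_index": next_index}, f)
    os.replace(tmp_path, path)


def process_items(items, checkpoint_path, handle, stop_after=None):
    """Process items from the last checkpoint onward.

    `stop_after` simulates an eviction after that many items (for testing).
    Returns the index of the next item still to be processed.
    """
    index = load_checkpoint(checkpoint_path)
    done = 0
    while index < len(items):
        if stop_requested or (stop_after is not None and done >= stop_after):
            break
        handle(items[index])
        index += 1
        done += 1
        save_checkpoint(checkpoint_path, index)
    return index


if __name__ == "__main__":
    signal.signal(signal.SIGTERM, _on_sigterm)
    results = []
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "checkpoint.json")
        first = process_items(list(range(10)), path, results.append, stop_after=4)
        second = process_items(list(range(10)), path, results.append)
    print(first, second, results)
```

The first call stands in for a pod evicted after four items. The second, like a rescheduled pod, resumes at item 4 rather than starting over.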

When Not to Use Spot Instances

Spot is a bad fit for anything that assumes continuity:

User-facing web or API services

Databases or other stateful workloads

Real-time or low-latency pipelines

Any workload that cannot tolerate sudden termination

If losing a node causes customer impact, Spot is the wrong tool.

Back to Our E-Commerce Example

Those background jobs handling imports, exports, and data processing?

They’re ideal candidates for Spot.

By running that node pool on Spot instances, the cluster saves ~$780 per month on that pool alone - without touching availability or user experience.

Spot isn’t a gamble when used correctly.

It’s deliberate architectural leverage.

Auto-Scaling: Sizing for Reality, Not Worst Case

One of the most common AKS sizing mistakes is provisioning for absolute peak load 24/7. Real workloads don’t behave that way. Traffic fluctuates throughout the day, e-commerce spikes during sales, and batch workloads ramp up and down on demand. Clusters sized permanently for peak are simply wasteful.

The smarter approach is to size for baseline load - normal daily traffic - and rely on auto-scaling to absorb spikes.

AKS provides multiple layers of elasticity:

Horizontal Pod Autoscaler (HPA) scales pod replicas based on load.

Cluster Autoscaler adds nodes when pods can’t be scheduled and removes nodes that stay underutilised.

Node Autoprovisioning (for advanced scenarios) provisions the right VM sizes just-in-time based on pending pod requirements.
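The Kubernetes documentation describes the HPA’s core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). No change is made when that ratio is within a tolerance, 0.1 by default. The following sketch reimplements only that rule plus min/max clamping. The real controller also applies stabilisation windows, scaling policies, and special handling for missing or not-ready pods, all omitted here. `hpa_desired_replicas` is a hypothetical name.

```python
import math


def hpa_desired_replicas(current_replicas, current_metric, target_metric,
                         min_replicas=1, max_replicas=100, tolerance=0.1):
    """Approximate the HPA scaling rule for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # within tolerance: leave replicas alone
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 10 replicas averaging 90% CPU against a 60% target
    print(hpa_desired_replicas(10, current_metric=90, target_metric=60))
```

With a 60% CPU target, 10 replicas averaging 90% scale to 15. If those extra pods don’t fit on existing nodes, they stay pending, and that is the signal Cluster Autoscaler acts on.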

Cost Impact

Instead of running 10 nodes for peak capacity all month (~$1,400/month), size for a 4–5 node baseline (~$560–$700/month) and let auto-scaling handle bursts.

With a 5-node baseline, if peak load occurs only 20% of the time, the average monthly cost settles at roughly $840/month (0.8 × $700 + 0.2 × $1,400), about 40% savings, while still delivering full performance during peaks.
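The blended figure is a time-weighted average. The following minimal sketch assumes $140 per node per month (the rate implied by ~$1,400 for 10 nodes), a 5-node baseline, and extra nodes that run only during the peak share of the month. It ignores the time nodes spend scaling up and down. `blended_monthly_cost` is a hypothetical helper, and integer percentages keep the arithmetic exact.

```python
def blended_monthly_cost(baseline_nodes, peak_nodes, peak_percent, node_monthly_cost):
    """Average monthly cost when `peak_nodes` run for `peak_percent`% of the month."""
    baseline_percent = 100 - peak_percent
    node_share = baseline_percent * baseline_nodes + peak_percent * peak_nodes
    return node_share * node_monthly_cost / 100


if __name__ == "__main__":
    always_peak = 10 * 140
    elastic = blended_monthly_cost(baseline_nodes=5, peak_nodes=10,
                                   peak_percent=20, node_monthly_cost=140)
    savings = 100 * (always_peak - elastic) / always_peak
    print(f"${elastic:,.0f}/month vs ${always_peak:,}/month ({savings:.0f}% saved)")
```

Running it prints `$840/month vs $1,400/month (40% saved)`. Dropping the baseline to 4 nodes brings the blended cost down to $728.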

This is where the headroom you build into initial sizing matters. That 20% buffer becomes the elastic space auto-scaling uses to respond quickly, without risking instability.

Auto-scaling turns capacity planning from a worst-case guess into a demand-driven model.

Final Thoughts

Sizing an AKS cluster is about balancing performance, reliability, cost, and growth. There’s no single right answer - different applications need different setups.

You don’t need to get it perfect on day one. You just need to avoid getting it wrong.

Start conservative, not minimal. A few well-sized nodes are better than one huge node or many tiny ones. Use node pools to separate workloads. Turn on autoscaling from the start. Use Spot instances for background or batch work. Monitor closely in the early months.

Most importantly, remember this: cloud infrastructure is not fixed. You can resize, scale, and adjust as you learn. Watch basic signals like CPU and memory, and also the quieter ones like IP usage and disk performance.

Good AKS sizing isn’t guesswork - it’s iterate, observe, adjust.

The goal is simple: reasonable costs, stable systems, and users who never notice the platform underneath.
